Train Finding Alexa Skill

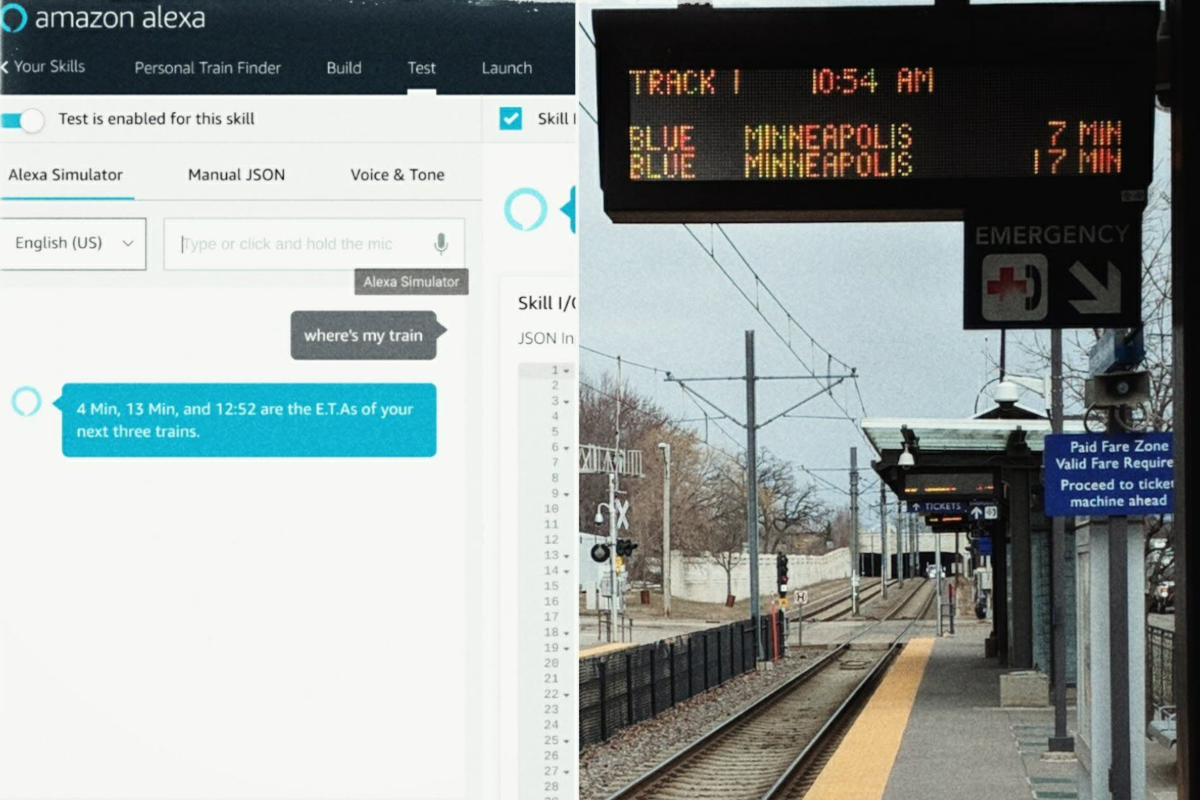

To make my morning commute easier, I created an Alexa skill that grabs real-time train data and tells me when my next three trains are due to arrive at my station.

I developed the Train Finding Alexa Skill with Node.js, Amazon Web Services, and Amazon's Developer Console.

This project is inactive; previous progress is compiled below. The original posts can be found via the Train Finder tag.

from: Project Updates, et al: 2018-04-01

Creating an Alexa Skill

Sometime in January, I decided I wanted a better way to time my morning commute to work. A few obsessive weeks later, I have an Alexa skill that uses real-time train data to tell me when my train is arriving at the station.

At the moment, the skill is only available on my development Echo and is hard-coded to my train. Building the skill was fairly easy, aside from the two tough parts noted below.

Tough Part Number 1: "Javascript is asynchronous."

For a while, my Alexa skill was launching and finishing without completing all the tasks I'd written into the code. While looking for possible problems and solutions online, I kept noticing the phrase "Javascript is asynchronous", which I definitely ignored for the first few days.

Searching for solutions online is a weird exercise in ignoring and not-ignoring information accurately; the first few times the phrase appeared, it was quite easy to ignore, mostly for two reasons: A) it hadn't come up in my previous dealings with Javascript, and B) the phrase "Javascript is asynchronous" wasn't accompanied by any additional text that explained its overall importance.

As it happens, "Javascript is asynchronous" was central to the problem I was having.

In hopes of helping someone else in the future, here's the explanation I wish I had seen next to the phrase "Javascript is asynchronous".

As a quick and dirty example, let's take a look at the pretend-code below:

Computer, do thing A; //this will take 1 second

Computer, do thing B; //this will take 20 seconds

Computer, do thing C; //this will take 3 seconds

If Javascript weren't asynchronous...

If Javascript weren't asynchronous, the above code would take 24 seconds to process; there would be a 20-second pause while working on thing B.

But Javascript actually is asynchronous

Because Javascript is asynchronous, the above code might get through all three lines in about 4 seconds. Instead of working on thing B for 20 seconds before moving on to thing C, the system gets thing B started and immediately moves on to thing C. (It's our job to come back to thing B when it's done.)

Asynchronicity is a cool feature -- it means something that takes a while doesn't stall your processing. You can think of it like putting a cake in an oven: you wouldn't stand in front of the oven for 30 minutes to watch it bake; you'd get it started, go work on something else, and then come back for it when it's done.
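Here's a rough, runnable version of the pretend-code above. It's only a sketch: the function names are made up, setTimeout stands in for whatever slow work thing B really is, and the 20 seconds is shrunk to 20 milliseconds so nobody has to wait.

```javascript
// Record the order in which things actually happen.
const log = [];

function doThingA() {
  log.push('A done');
}

// Thing B is the slow one: we start it and hand over a callback
// to run when it finishes. setTimeout stands in for the slow work.
function doThingB(onDone) {
  log.push('B started');
  setTimeout(function () {
    log.push('B done');
    onDone();
  }, 20);
}

function doThingC() {
  log.push('C done');
}

doThingA();
doThingB(function () {
  console.log(log.join(' -> '));
});
doThingC();
// Eventually prints: A done -> B started -> C done -> B done
```

Thing C finishes before thing B does, even though B was started first. That's the cake going in the oven while you get on with something else.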

In Javascript, there are certain tasks that always require you to come back when they're done, regardless of how long they take.

For example:

- A task like "What's 1 + 1?" might always evaluate immediately.

- A task like "Ask this web server what's 1+1" is probably fast enough to also evaluate immediately. However, because of the type of task it is (i.e. asking a web server for information) Javascript will always make you go away and come back for the answer, regardless of how long it actually takes.

In my particular example, I was asking a web server for train data. In theory, the task would complete quickly enough that waiting would be fine. (Also, since the train data is the only point of the skill, there was nothing else to do while waiting.) However, I had to go away and come back for the answer,[1] simply because that's how Javascript handles that sort of task.
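A small sketch of what "go away and come back" looks like in code. The askServer function here is hypothetical (it is not from my skill), and setTimeout fakes the trip to the web server so the example runs without a network connection.

```javascript
// Hypothetical stand-in for a web request; setTimeout fakes the round trip.
// The answer is delivered to the callback, never returned directly.
function askServer(question, callback) {
  setTimeout(function () {
    callback(null, '2');
  }, 10);
}

// Broken: the call itself returns nothing, so `answer` is still
// undefined when the very next line runs.
const answer = askServer("what's 1+1?", function () {});
console.log('Right away:', answer); // prints "Right away: undefined"

// Working: do the follow-up work inside the callback.
let received;
askServer("what's 1+1?", function (err, result) {
  if (err) throw err;
  received = result;
  console.log('Came back later:', received);
});
```

The broken half is roughly the shape of the problem I had: the code that needed the answer ran before the answer existed.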

Tough Part Number 2: Parsing an array of JSON objects

Once I had my train data in hand, I thought I was basically done. The data arrives as an array of individual JSON train objects; arrays are easy enough, JSON formatted data seemed easy enough, I figured I was golden.

I was not golden.

I couldn't find useful instructions on parsing JSON when working with individual instances of JSON objects placed into an array, instead of a single JSON object with internal arrays. The commands are easy enough -- it was the order of operations that was all a tangle,[2] and it led me down a surprisingly existential rabbit hole: What is an array? What is JSON formatted data?? When does an array stop being an array and start being JSON? Can something be an array and JSON at the same time? If I do some JSON parsing on an array, does it cease to be an array and only exist as JSON formatted data?

Because I found lots of instructions on how to deal with arrays inside of JSON objects, but not JSON objects inside of arrays, here's what worked for me:

Step 1

Get your data and smush it together in one long text string.

response.on('data', function(chunk) { stringData += chunk.toString(); });

It might look something like this when complete:

[{"DepartureText":"2 Min","Route":"Blue","RouteDirection":"NORTHBOUND"},

{"DepartureText":"6:33","Route":"Blue","RouteDirection":"NORTHBOUND"},

{"DepartureText":"6:43","Route":"Blue","RouteDirection":"NORTHBOUND"}]
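To see why the smushing matters, here's a sketch that fakes a response arriving in two pieces. It assumes response behaves like a Node stream (the kind of object Node's https.get hands to its callback); an EventEmitter stands in for it here so the example runs offline, and the split point is made up.

```javascript
const { EventEmitter } = require('events');

// Stand-in for the real response object, which emits 'data' and 'end' events.
const response = new EventEmitter();

let stringData = '';
response.on('data', function (chunk) {
  // Each chunk is only a fragment; keep appending until 'end'.
  stringData += chunk.toString();
});
response.on('end', function () {
  console.log(stringData);
});

// Simulate the body arriving in two pieces, split in the middle of a key.
response.emit('data', Buffer.from('[{"DepartureText":"2 Min","Ro'));
response.emit('data', Buffer.from('ute":"Blue","RouteDirection":"NORTHBOUND"}]'));
response.emit('end');
```

Neither chunk is valid JSON on its own, which is why the parsing waits until everything has been glued together.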

Step 2

Parse the whole thing:

var myTrains = JSON.parse(stringData);

At this point, myTrains contains an array of parsed JSON objects: a regular Javascript array whose elements are regular Javascript objects.
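For anyone stuck in the same existential rabbit hole, a quick sketch that checks what each thing actually is, using a shortened two-train version of the sample data:

```javascript
const stringData = '[{"DepartureText":"2 Min","Route":"Blue"},{"DepartureText":"6:33","Route":"Blue"}]';
const myTrains = JSON.parse(stringData);

console.log(typeof stringData);       // string
console.log(Array.isArray(myTrains)); // true
console.log(typeof myTrains[0]);      // object
console.log(myTrains.length);         // 2

// Gluing a whole object into a string gives the unhelpful "[object Object]".
console.log('Next train: ' + myTrains[0]);               // Next train: [object Object]
console.log('Next train: ' + myTrains[0].DepartureText); // Next train: 2 Min
```

Before parsing, it's just text that happens to be formatted as JSON. After parsing, it's an array, and there's no JSON left to worry about.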

Step 3

Access information about any given train by selecting the correct object in the array and using the appropriate property name:

var text = myTrains[0].DepartureText;
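Putting the three steps together, here's a sketch that pulls the next three departure times out of the sample data and builds one sentence from them. The wording of the sentence is made up for illustration; it isn't what my skill actually says.

```javascript
const stringData =
  '[{"DepartureText":"2 Min","Route":"Blue","RouteDirection":"NORTHBOUND"},' +
  '{"DepartureText":"6:33","Route":"Blue","RouteDirection":"NORTHBOUND"},' +
  '{"DepartureText":"6:43","Route":"Blue","RouteDirection":"NORTHBOUND"}]';

const myTrains = JSON.parse(stringData);

// Take at most three trains and pull out each one's DepartureText.
const times = myTrains.slice(0, 3).map(function (train) {
  return train.DepartureText;
});

// Hypothetical phrasing for the spoken response.
const speech = 'Your next ' + myTrains[0].Route + ' line trains: ' + times.join(', ') + '.';
console.log(speech); // Your next Blue line trains: 2 Min, 6:33, 6:43.
```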

About Alexa being dumb

I've long said that Google's Nameless Assistant Thing is smarter than Alexa; now that I've done the tiniest bit of Alexa coding, that feeling makes more sense.[3]

When setting up voice commands for an Alexa skill, no "smarts" are involved on Amazon/Alexa's part. The developer just types out dozens upon dozens of different commands that a user might use with the skill:

- Give me the weather

- Tell me the weather

- What's the weather

- Give me today's weather

- Tell me today's weather

- What's today's weather

If a user says "Alexa, Tell me about today's weather", and the developer didn't think to add a set of permutations that included the word "about", Alexa's not going to figure it out.[4]

For my specific skill, I activate it by asking "where's my train"; if I ask "where is my train", Alexa has no idea what I'm talking about.
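As a toy model of the behavior I'm describing (this is not how Alexa is actually implemented, and every name here is made up), picture a literal lookup against the list of phrases the developer typed in:

```javascript
// Toy model only: the phrases the developer remembered to type in.
const sampleUtterances = new Set([
  "where's my train",
]);

// Lowercase the phrase and strip basic punctuation before comparing.
function normalize(spoken) {
  return spoken.toLowerCase().replace(/[?.!,]/g, '').trim();
}

function matchesSkill(spoken) {
  return sampleUtterances.has(normalize(spoken));
}

console.log(matchesSkill("Where's my train?"));  // true
console.log(matchesSkill("Where is my train?")); // false
```

Under this model, the only fix for "where is my train" is for the developer to go back and add it to the list.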

In that sense, Alexa isn't intelligent at all; she just relies on lines upon lines of brute force coding.

I've had a chance to do some direct comparisons with Alexa and Google's Nameless Assistant Thing, via a Google Home Mini, and the difference in smarts is striking once you notice it. I'll write about some side-by-side tests next quarter.

A quick note about voice interfaces

One thing about voice interfaces: it's difficult to know "what screen you're on" when there's no screen.

One recent morning, Alexa misheard "Alexa, where's my train?" as something else. I have no idea what she heard, and once I realized she misheard me I cut her off and repeated the command: "No no... Alexa, where's my train??"[5]

After repeating my request, I got a slightly confused response from Alexa[6] and she asked for my confirmation that I wanted to change some setting related to tracking Amazon.com packages. I thought Alexa was in one part of a navigation tree; in fact, she was in another. I was essentially pressing buttons on the wrong screen.

With visual interfaces, when I accidentally take a wrong turn or miskey some information, the screen updates and I can see I've made an error. With voice interfaces, when I make an error I perhaps have no idea until I make my next request and, hopefully, get an error message or some other noticeable warning:

User: Text Dan -- "Can't wait to see you tonight, sexy."

Voice: Okay. Texting Dad "Can't wait to see you tonight sexy." Should I send this message or change it?

One of the most important concerns for the user of any interactive system is to know the current state of the system:

- Where am I,

- What can I do from here,

- What am I about to do,

- What have I just done?

Voice interfaces are convenient. Voice interfaces are also less precise than screens... perhaps differently precise, but maybe just less precise. With visual interfaces, a user can specifically triple check, within seconds, that they're texting Dan and not Dad before hitting send; voice interfaces don't afford the same opportunity.

Unfortunately I don't have any brilliant solutions for this problem, at the moment... just something that I'm considering.

from: Projects and Updates, 2018-07-01

Here's the thing with testing voice interfaces... you sound like you're losing your mind while you're doing it:

"Computer, where's my train?

Computer, where's MYYyyyy train?

Computer, where's mahtrain?

Computer, WHERE'Smahtrain?

Computer..."

Voice interfaces and my Alexa skill

Previously on CKDSN: I made an Alexa Skill that tells me when my train is arriving at the station.

Originally, "Where's my train" seemed like a can't-fail option for launching my Alexa skill, since I'm the only user. However, as I've gotten more cavalier when launching the skill, I've started getting some confused responses from Alexa.

- She often thinks I'm saying "Where's my trade?"

- For a while she was asking, "So you want to listen to music by Train, right?"

- Recently she's been giving me a news report about train delays in Paris.

I'm starting to appreciate the grand difficulty of testing voice interfaces. I talk to Alexa in the shower, with a shirt covering my face, while walking through three different rooms that each have their own device... and I sort of expect Alexa to understand me, no matter what. On the one hand, that's pretty unreasonable. On the other hand, she mostly understands me, no matter what.[7]

That said, a huge growth opportunity for both Alexa and Google's Nameless Assistant Thing is context. I have an Echo Dot dedicated to my train finding skill. It's literally the only thing I ever ask it to do, and I do it every weekday morning. If I had a human dedicated to the same task and they misheard me ("Hey Alex... play some Train"), that human would know I absolutely do not want to hear songs by Train.[8]

I think the biggest context fail for both systems, only because it seems like low hanging fruit, is not using environmental clues to set their voice's volume. If I whisper "Hey Google" or "Hey Alexa", and there's dead silence in the background, that's a great opportunity for the machines to also respond at a reduced volume. (Instead of the volume I was using to blast Lady Gaga, hours prior.)

[1] For the record, using a callback is the way one "comes back for the answer".

[2] Specifically, I definitely recall being confused about whether or not I needed to separate each train out and then parse them individually. Also, at some point, I was getting "[object Object]" as a result instead of actual train data.

[3] That said, confirmation bias is a real thing.

[4] In theory you can use regular expressions to help out, but then you'd just have two problems.

[5] Note: This seems like a fairly natural response. If I ask someone for a spoon and they start to hand me a fork, I'll stop them and repeat the request.

[6] She actually displayed some amount of "sorry... I'm not sure I understand" confusion.

[7] My own skill aside.

[8] Another human trick: we know when we didn't hear something clearly, which can help us determine if we should trust what we think we heard.